“‘You’re coming of age in a 24/7 media environment that bombards us with all kinds of content and exposes us to all kinds of arguments, some of which don’t always rank all that high on the truth meter,’ Obama said at Hampton University, Virginia. ‘With iPods and iPads and Xboxes and PlayStations, none of which I know how to work, information becomes a distraction, a diversion, a form of entertainment, rather than a tool of empowerment, rather than the means of emancipation.’”

Sigh. We’ve come a long way from “Dirt Off Your Shoulder.” In a commencement speech at Hampton University over the weekend, President Obama channeled his inner grumpy old man (Roger Ebert?) to warn new grads about the perils of gaming and gadgetry. First off, it’s a ludicrous statement on its face: iPods are not particularly hard to work, and if they’re really insidious Weapons of Mental Distraction, why give one to the Queen (who, by the way and to her credit, has fully embraced the Wii)?

Speaking more broadly, misinformation has been around as long as the republic; go read up on the Jefferson v. Adams race of 1800. If anything, today’s information society allows people to more easily hear the news from multiple sources, which is a very good thing. In fact, the reason our political culture these days is constantly bombarded with irrelevant, distracting, and inane mistruths has nothing (none, zip, zero) to do with iPods, iPads, Xboxes, or PlayStations. It has to do with ABC, CBS, WaPo, Politico, and the rest of the Village, i.e. the very same people the President was noshing with a few weeks ago at the ne plus ultra of “information becoming distracting entertainment”: the White House Correspondents’ Dinner.

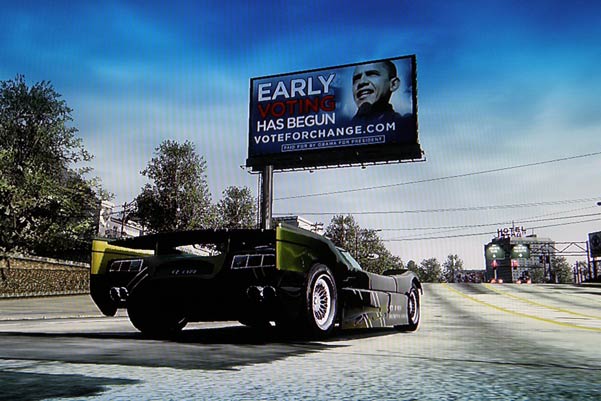

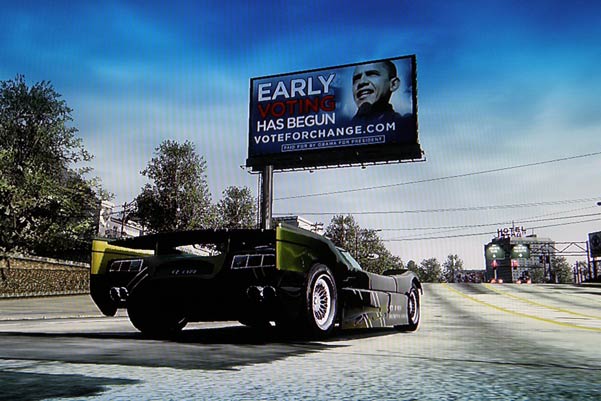

Finally, while the “multi-tasking is distracting” proposition does seem to hold water, scientifically speaking, the jury’s still out on the pernicious effects of Xboxes and the like. In fact, there are plenty of studies suggesting that video games improve vision, improve reflexes, improve attention, improve cognition, improve memory, and improve “fluid intelligence,” a.k.a. problem-solving. So, let’s not get out the torches and pitchforks just yet. It could just be that the 21st-century interactive culture is making better, smarter, more informed citizens. (And, hey, let’s not forget Admongo.)

To get to the point, while it’s not as irritating as the concerned-centrist pearl-clutching over GTA in past years, it’s still a troubling dynamic to see not only a young, ostensibly Kennedyesque president but the most powerful man in the world tsk-tsking about all this new-fangled technology ruining the lives of young people. Let’s try to stay ahead of the curve, please. And let’s keep the focus on the many problems (lack of jobs, crushing student loan and/or credit card debts, etc.) that might be distracting new graduates right now more than their iPods and PS3s. (Also, try to pick up a copy of Stephen Duncombe’s Dream. Video game-style interactivity isn’t the enemy. It’s the future.)